This guide assumes that you already have your DAGs in a GitHub repository; if you don't, you can use this example repository. It contains a selection of the example DAGs referenced in the official Apache Airflow GitHub repository.

To load your custom DAGs into the chart from a GitHub repository, the repository you synchronize with your deployment must contain only DAG files. If you use the example repository provided in this guide, clone or fork the whole repository and then move the content of the airflow-dag-examples folder to a standalone repository.

Step 1: Deploy Apache Airflow and load DAG files

The first step is to deploy Apache Airflow on your Kubernetes cluster using Bitnami's Helm chart. There are three different ways to load your custom DAG files into the Airflow chart; this guide gets them from a Git repository at deployment time. Follow these steps:

First, add the Bitnami charts repository to Helm: `helm repo add bitnami`

Next, execute the following command to deploy Apache Airflow and to get your DAG files from a Git repository at deployment time. Remember to replace the REPOSITORY_URL placeholder with the URL of the repository where the DAG files are stored.
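The deployment step can be sketched as a small Python helper that assembles a Helm 3 `helm install` invocation for the `bitnami/airflow` chart with Git-synced DAGs. It assumes that the Bitnami repository has already been added to Helm under the name `bitnami`. The value names under `git.dags` are an assumption based on recent Bitnami Airflow chart releases; older releases used different keys, so confirm them with `helm show values bitnami/airflow` before running anything. The release name `airflow`, the branch `main`, and the repository name `dags` are placeholders. By default the helper only prints the command.

```python
import shlex
import subprocess

# ASSUMPTION: these value names follow the `git.dags` section of recent Bitnami
# Airflow chart releases; older releases used different keys. Verify them with
# `helm show values bitnami/airflow` for the chart version you deploy.
def build_install_command(repository_url: str, branch: str = "main",
                          release: str = "airflow") -> list[str]:
    """Return the argv for a Helm 3 `helm install` that clones DAGs from Git."""
    settings = {
        "git.dags.enabled": "true",
        "git.dags.repositories[0].repository": repository_url,
        "git.dags.repositories[0].branch": branch,
        "git.dags.repositories[0].name": "dags",  # placeholder repository name
    }
    argv = ["helm", "install", release, "bitnami/airflow"]
    for key, value in settings.items():
        # Passing argv as a list avoids shell quoting issues with the "[0]" index.
        argv += ["--set", f"{key}={value}"]
    return argv


def run(argv: list[str], dry_run: bool = True) -> None:
    """Print the command (default) or execute it, raising on a non-zero exit."""
    if dry_run:
        print(shlex.join(argv))
    else:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Replace REPOSITORY_URL with the URL of the repository that holds only DAG files.
    run(build_install_command("REPOSITORY_URL"))
```

Building the command as an argument list keeps the bracketed `repositories[0]` keys away from shell globbing. Call `run(..., dry_run=False)` only after you have checked the printed command against your chart version.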

AIRFLOW KUBERNETES EXECUTOR POD TEMPLATE CODE

Adding DAG files to the repository and upgrading the deployment is also the process to follow whenever you want to change existing DAG files.

This guide assumes that:

- You have a Kubernetes cluster running with Helm (and Tiller if using Helm v2.x) installed.
- You have the kubectl command-line tool (kubectl CLI) installed.
- You have already created custom DAGs and have the source code in a GitHub repository.
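Before deploying, you can confirm that the two command-line tools are available with a minimal Python check. It only looks for `helm` and `kubectl` on your `PATH`. It does not verify that a cluster is reachable, which Helm version is installed, or whether Tiller is running.

```python
import shutil

# The CLIs this guide relies on; cluster access is NOT checked here.
REQUIRED_TOOLS = ("helm", "kubectl")


def missing_tools(tools=REQUIRED_TOOLS) -> list[str]:
    """Return the names of required CLIs that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing prerequisites:", ", ".join(missing))
    else:
        print("helm and kubectl found on PATH")
```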

AIRFLOW KUBERNETES EXECUTOR POD TEMPLATE HOW TO

In addition, you will learn how to add new DAG files to your repository and upgrade the deployment to update your DAGs dashboard.

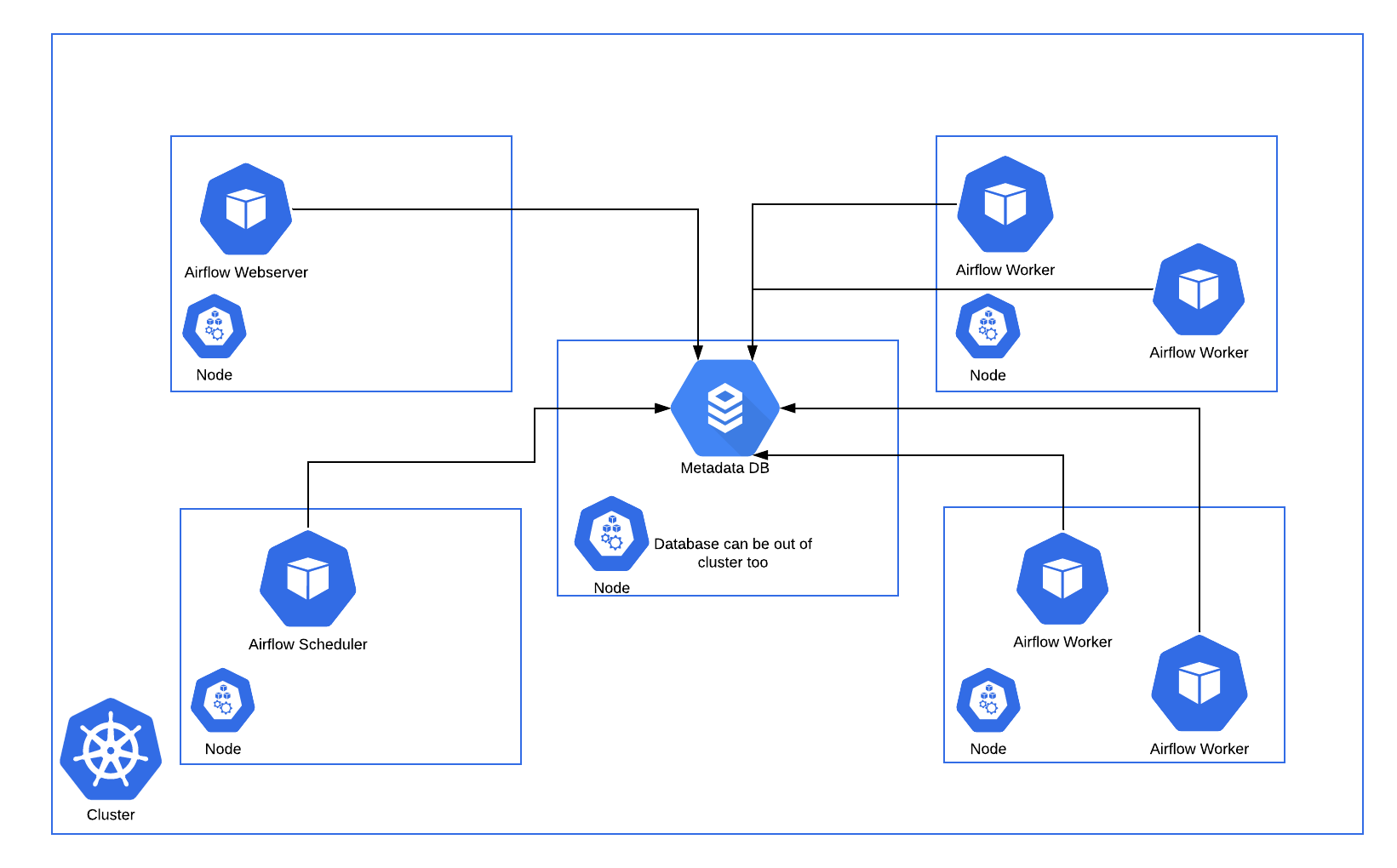

Apache Airflow is an open source workflow management tool used to author, schedule, and monitor ETL pipelines and machine learning workflows, among other uses. To make it easy to deploy a scalable Apache Airflow in production environments, Bitnami provides an Apache Airflow Helm chart that is made up, by default, of three synchronized nodes: web server, scheduler, and workers. You can add more nodes at deployment time or scale the solution once deployed.

Users of Airflow create Directed Acyclic Graph (DAG) files to define which processes and tasks must be executed, in what order, and how they relate to and depend on one another. These DAG files can be loaded into the Airflow chart. This tutorial shows how to deploy the Bitnami Helm chart for Apache Airflow and load DAG files from a Git repository at deployment time.
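To make the DAG idea concrete without depending on Airflow itself, the sketch below uses Python's standard `graphlib` module (Python 3.9+) to derive a valid run order for a few hypothetical ETL tasks. It only illustrates what the dependency edges in a DAG file encode. It is not how Airflow's scheduler resolves dependencies, and the task names are invented for the example.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical ETL tasks: each key maps to the set of tasks it depends on (upstream).
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid run order; raise CycleError if the graph is not acyclic."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(execution_order(pipeline))
    try:
        execution_order({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("cycle detected: not a valid DAG")
```

Tasks without a path between them, such as `transform` and `validate` here, may appear in either order. A cycle, in which two tasks each wait for the other, raises `graphlib.CycleError`, which is why workflows must be acyclic.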
